What if conditional branching (the if statement) had not become the dominant approach to code? What would the alternatives look like? Evan Buswell's dissertation (in progress) addresses these questions with languages from an alternate computer history that eschew the if. He named them the noneleatic languages, referencing pre-Socratic alternatives from before Western logic became locked in Platonic thinking.

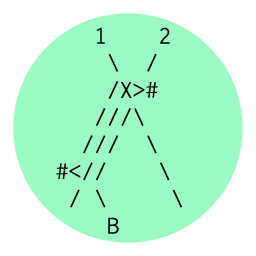

The two key noneleatic languages developed so far, neasm and nevm, are assembly and machine languages for the same virtual machine (source code here). Branching is replaced with self-modifying code. This sample program in neasm shows how we simulate branching. I've added some additional comments for clarity:

    ; branch.s A simulation of a conditional branch
    ;
    ; This code compares C1 to C2. If C1 is greater than or equal to C2, the script
    ; continues to BGTE. Otherwise, it skips over BGTE and goes to BLT.
    IP: start                 ; the first 4 bytes always indicate the instruction pointer
    C1: 123                   ; the first comparand
    C2: 456                   ; the second comparand
    start:
    -Iii CMP: 0 C1 C2         ; Subtract C2 from C1, put the result in CMP
    >IiI MASK: 0 CMP 31       ; Take the sign bit of CMP (1 or 0) and move it right 31 times. If CMP is
                              ; non-negative, the result is all 0's; if negative, all 1's. The result is
                              ; put in MASK
    &UuU JMP: 0 MASK 0x40     ; JMP = 0x40 masked by MASK. For every bit that is a 0 in MASK, the
                              ; corresponding bit of 0x40 is masked to 0, but for every bit that is a 1,
                              ; the original value is kept. If MASK is all 0's, JMP is 0. If MASK is
                              ; all 1's, JMP will be 0x40. 0x40 is the length of four instructions
                              ; (the distance from BGTE to BLT).
    +uuU IP JMP BGTE          ; Move IP (the instruction pointer) to the location of BGTE + JMP. If JMP
                              ; is 0x40, that will be the location of BLT.
    BGTE:
    =uU 0xF000 "Grea"         ; Print "Greater Than" to the screen
    =uU 0xF004 "ter "
    =uU 0xF008 "Than"
    #                         ; End
    BLT:
    =uU 0xF000 "Less"         ; Print "Less Than" to the screen
    =uU 0xF004 " Tha"
    =uU 0xF008 "n "
    #                         ; End
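The mask arithmetic in branch.s can be checked outside the virtual machine. What follows is only a rough Python sketch, assuming 32-bit words and the usual reading of `&` as bitwise AND; `jump_offset`, `to_signed`, and the address given to `BGTE` are hypothetical names and values chosen for illustration, not part of neasm or nevm.

```python
WORD = 0xFFFFFFFF   # assumption: a 32-bit machine word


def to_signed(x):
    """Reinterpret a 32-bit pattern as a two's-complement signed value."""
    return ((x & WORD) ^ 0x80000000) - 0x80000000


def jump_offset(c1, c2, distance=0x40):
    """Return 0 when c1 >= c2 and `distance` when c1 < c2, using no conditional."""
    cmp_ = (c1 - c2) & WORD                 # like -Iii: CMP = C1 - C2
    mask = (to_signed(cmp_) >> 31) & WORD   # like >IiI: all 0's or all 1's
    return mask & distance                  # like &UuU: JMP = MASK AND 0x40


BGTE = 0x50  # hypothetical address of the BGTE label
print(hex(BGTE + jump_offset(123, 456)))  # C1 < C2: lands 0x40 past BGTE, i.e. on BLT
print(hex(BGTE + jump_offset(456, 123)))  # C1 >= C2: lands on BGTE itself
```

The sketch only holds while the difference of the two comparands fits in a signed 32-bit word; a larger difference would wrap around and flip the sign bit.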

To give a better idea of what the program looks like at each stage of execution, below is the same logic implemented in machine code (nevm). The instruction pointer is green, and orange marks the value changing at each step:

There is a more complex example, recreating the famous 10 Print Chr$(205.5+Rnd(1)); : Goto 10 program, in this Critical Code Studies Working Group 2018 thread, where I first came across Evan's work.

» Can you tell me about how this idea developed? Did it grow out of experiments in blurring the line between state and code in your Live C project?

The two projects are definitely related. However, the Live C project started as a very practical coding exercise. Livecoding is catching on in the mainstream, but for a lot of applications, the real-time code is really just a C library that doesn’t change at all. I wanted to push the boundaries of what was possible with livecoding.

The noneleatic languages project came out of the typical mental stew of many things, but if I were to pick a single point, it would be my research on Von Neumann’s First Draft of a Report on the EDVAC. In that paper, Von Neumann offers a conditional substitution instruction, rather than a conditional branch. The idea was that, according to some condition, you would substitute out an address within an instruction. I think at this stage Von Neumann was trying to come up with an execution model that nods more towards Church’s approach to the Entscheidungsproblem than Turing’s, or perhaps he was trying to bridge between them. It’s also possible that he was deliberately obscuring the conditional branch in his public presentation for various reasons. More research is needed here. But in any case, at this early, never-implemented phase, the lack of a conditional branch was at least not immediately visible to others in the field as impractical.

In thinking of the rest of the history of computer language development in these terms, it became clear to me that, far from laying anything to rest, the conditional branch is surrounded by constant related anxieties. Dijkstra’s concept of structured programming is centered on an anxiety that the conditional branch might not be enough, as is the introduction of blocks with ALGOL ’58. Object-oriented programming, I would argue, has a similar anxiety, where the attempt is to tie data itself to blocks of code, lest that data get out of control.

Now, obviously in part all of these anxieties are about locality, which I would argue is a fundamental problem of language as such, and not a problem unique to code. But I don’t think that explains it entirely. After all, locality problems have been solved pretty well in everyday language already; this is why we have paragraphs, sections, chapters, volumes, tables of contents, indices, etc. Nobody is really suggesting adding those types of things to programming languages. And perhaps they should. The only thing vaguely equivalent is the “file”, and although people have their opinions, the decision to put different pieces of code together in the same file doesn’t attract the same vitriol as this other kind of anxiety.

Anyway, what I arrived at is that this anxiety is most fundamentally about keeping code (the actual written program) as code (the concept of a language with a unitary and exact interpretation). Treating language as code makes it behave in a certain way, epistemically. Interpretation—or execution—of code should not be required to evaluate whether it behaves correctly. Rather, one of the fundamental purposes of code is to assure us, against our anxieties to the contrary, that we can make language objective, that we can be sure that a process is just as reliable as a record. But what impinges on this assurance is precisely the obverse of code, state, the particular values of registers and the voltages and electron movements and everything else which is a snapshot of a machine, which unfolds over time rather than being fixed, and which is particular rather than universal.

At this point, this ties in with the rest of my dissertation research, on the history of the forms of credit. At a certain point, before the invention of code, debt records were swallowed up into an increasingly large process. And right about when code was being invented, the correctness of the process of creating records started being more epistemically important than the electronic or punched card record itself. The record was recast as the result of a process, which if correct has to be respected. This general attitude was then adopted in many fields, and was present throughout the invention of code in the 1940s. So to be clear, according to my argument, historically speaking code had to form this way because of the way our financial instruments had already mutated.

The noneleatic languages, then, are sort of an alternate history of a weird utopia in which we didn't have this anxiety between code and state. In this hypothetical universe, instead, there's an effort to conserve the representative power of the instantaneous, running state. So these people are not worried about whether or not they can know the process is correct aside from its running context; they're worried about really capturing the essence of the state and its future potentialities at any given moment.

So as for its relationship to Live C, this turned out to be coincidental, but maybe not accidental. Something about the livecoding movement, I think, is already unraveling these decades of anxiety over code and state. Or rather, livecoding is showing up now in part because that anxiety has already unraveled somewhat. And I think Live C has something to say about that code–state binary as well. Livecoding is more than continuous recompilation; it's recompilation that preserves state. The code changes, the state stays the same. One thing you find out very quickly when writing a livecoding interpreter or compiler is that there are many, many points at which the decision about what is code and what is state is somewhat arbitrary. For example, if the computer's in the middle of a running function, and you replace that function with something else, what happens? It can run out the previous function, or it can try to reinterpret the IP to point to a new location. Assuming it runs out the previous function, where does it return to? In Live C, all the function calls are atomic on the value of the function that was running when the program first started up. But one thing that means is that the all-important main() is fixed forever. It effectively becomes part of the context for the executing code, rather than a changeable part of the code itself. According to the livecoding precept that the code is recompiled but the state is not, you might even say main() is a part of the state!
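To make "recompilation that preserves state" concrete, here is a minimal Python sketch. It is not how Live C works; `install`, `code`, `state`, and `step` are hypothetical names, and Python's built-in `exec` stands in for a real compiler. The function body is swapped while the program is running, and the counter it operates on carries over untouched.

```python
state = {"count": 0}   # the state: survives every code swap
code = {}              # the code: name -> currently installed function


def install(name, source):
    """'Recompile' one function from source text and swap it in; state is untouched."""
    namespace = {}
    exec(source, namespace)
    code[name] = namespace[name]


install("step", "def step(state):\n    state['count'] += 1\n")
code["step"](state)
code["step"](state)            # two calls to the first version of step

install("step", "def step(state):\n    state['count'] += 10\n")
code["step"](state)            # the new version picks up where the old one left off

print(state["count"])
```

Even in this toy, the split is a choice: the `code` dictionary is itself a mutable value, and nothing but convention keeps it out of `state`.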

» Could you give some background on how the conditional branch became the dominant mode of thinking?

Ah, I think I just did that in the previous answer. But I can give a little more detail on the early part here. Note that this is an ongoing research effort for me, so some of this might be a bit more patchy than it ought to be.

The first modern instance of what we might call programming emerged with the Hollerith (which would become IBM) unit record machines. These are machines that sort, tabulate, and calculate using punched cards. Even in Hollerith's very first census counting machine (1890), which did very little more than count holes occurring in a particular position, the electrical pulse generated from the card could be arbitrarily routed to different accumulators using a matrix of pins and wires on the back of the machine. In the 1920s and 30s unit record machines had a much more complicated plugboard wiring system. Until the 1940s, this is what programming was: routing data from one thing to another. Always the data was represented as a signal, and the program was the routing of the signal from one place to another, having no representation other than the physical connections.

The one exception to this is Babbage's Analytical Engine. This had a branch instruction which moved forward or backwards either unconditionally, or according to whether the "run-up" lever was set, which would happen according to a difference in sign between the result and one operand. This is kind of an in-between code, and I wouldn't quite consider it a conditional branch in terms of its meaning, although the effect is the same. Anyway, the potential was already somewhat present at this point.

In the 1940s, a number of machines began to represent the instructions and not just the data. First, the Harvard Mark 1 represented the program as a series of operations on different registers, inputs, and outputs. This machine read its instructions one at a time from a paper tape, but it did not have a conditional branch and so went on sequentially. Loops on this machine were accomplished, however, by using physical loops of paper tape. The ENIAC in its first iteration (1945) operated just like an oversized unit record calculator. It had many units where data would be routed by using physical plugs. However, the ENIAC had an additional operating part called the Master Programmer which essentially would switch the destination circuit from one thing to another in order to advance the program from doing one kind of thing to another kind of thing.

The EDVAC was already being designed as the ENIAC became operational, and Eckert and Mauchly had settled on a single core memory and serial operation, thus anticipating some kind of code to run it. Von Neumann's role in the EDVAC project differs according to whom you ask, but at the very least it is clear that he came up with the list of computational orders in collaboration with Jean Bartik, one of the ENIAC programmers. As I already mentioned, in the very first document about the EDVAC (1945) Von Neumann suggests a conditional substitution, rather than a branch. The EDVAC was completed with a conditional branch. But the first computer to use some version of Von Neumann's code was the ENIAC (1947-8). The Master Programmer, it turned out, allowed reconfiguration of the ENIAC so that orders could be entered into the function tables. This was done, and the first major program written for the reconfigured ENIAC (1948) was a Monte Carlo simulation of an atomic explosion Von Neumann ran to help with his other, more classified, military appointment. The reconfigured ENIAC had a "C.T." or conditional transfer order, which would transfer the code to the address in a particular accumulator if the contents of another accumulator were greater than or equal to zero. This is already fairly modern code.

As for the further history of the conditional branch, as already mentioned, two things come to mind more than any others. The first is the invention of the block instruction in ALGOL '58. This allowed the conditional transfer to become instead conditional execution. The second is Dijkstra's Structured Programming movement in the late 1960s, which is best remembered today by his letter published as "GOTO Considered Harmful." Structured programming insisted that programs operate according to their block logic, that they call functions and return via the same path they came, etc. As you probably know, there was already an esolang about this: COMEFROM. [Ed note: Interesting to note that COMEFROM -- my personal favorite method of flow control -- is a valid command in Python]

» In your research, did you find alternatives to this method? If so, how far did they get in offering practical alternatives? We touched on the URISC architecture in the critical code working group discussion, but I’m curious if there were others, especially in early days of computing. [Ed note: In the CCSWG thread, I draw a comparison to OISC systems such as SUBLEQ; his answer is there]

Historically, the main alternative to the conditional branch has been to have no branching at all. As much as this sounds like not a "real" alternative, for many purposes it worked just fine. The other historical alternative, which is still in major use within the modular synthesis community, is physically patching busses from one point to another, and then having a programmer/sequencer that switches between these busses according to an input signal.

The only other briefly considered alternative that I've heard of is Von Neumann's conditional substitutions. It isn't quite code, but we might consider Alonzo Church's lambda calculus to be an alternative. It has only substitutions, and the "code" changes as those substitutions are made. Interestingly, Church's solution to the Entscheidungsproblem seems to be more popular with most mathematicians, whereas Turing's solution is more popular with computer scientists. Gödel is supposed to have disbelieved in the universality of Turing's model until it was proven to be equivalent to Church's.

Another example we might put out there is Conway's Game of Life. Here conditionals are constantly being evaluated, but the code never really "branches."

But really, I wouldn't expect to find a whole lot of alternatives, since my argument is that our particular epistemic needs in the 1940s and after led us to the conditional branch in the first place. As I say above, the transformation of the forms of our financial records was already shifting the appearance of concreteness to processes, rather than records. So code placing its epistemic weight squarely on a static representation of process, rather than record, is a natural outgrowth.

» Is the lack of branching necessarily tied to self-modifying code? Would other languages you develop for this project also be self-modifying or do you envision other approaches?

That is a very good question. For me, anyway, the answer is yes. The whole paradigm of the noneleatic languages is that the state as a whole, including a snapshot of the running code, should be representationally available. That is, at any given moment the code should itself clearly tell us about the state that it's in. That necessarily involves an evolution of the representation of the program along with the running of the program itself.

However, as to the more general question here, of whether we can create a useful language that neither is self-modifying nor involves conditional branches, I'm not sure whether we can or can't. On the one hand, it is trivial to do so. All one has to do is to say that everything which changes during the execution is state. So for example, I could have made an instruction in neasm that executes the contents of a particular register. Then, presto! The "code" is no longer self-modifying. In truth, every bit of code run on a modern computer is self-modifying, in that it modifies the state of the computer, and the choice of which part is the computer's state and which is the computer's code is somewhat arbitrary. The entirety is needed in order to reproduce any given calculation. We can even take this to its logical limit, and hypothesize a language/machine in which the only thing which ever changes is the position being executed. This would be the Eleatic-est of languages, changing as little as possible as it went through the execution. I don't know if such a language is possible, theoretically or practically, but even if it were, you still have that pesky state creep back in via the execution position. So I think the truth is that it is part of the fundamental idea of an algorithm to modify the material of the calculation, and selecting which part of that material is "self" and which is "other" is not mathematically defined. It is defined, rather, by our epistemic commitments, which try to define a concept of code by separating it from state. If this anxiety about the two vanished, then, poof, the notion of whether code is or is not self-modifying also vanishes.

» When you say that state (code unfolding over time) is particular rather than universal, do you mean that the latent behaviors of code are always somewhat opaque or that they are truly non-objective, and if so, in what context (to a particular machine)? I ordinarily think of state as being a consequence of the program -- that the initial state for a program should be the same from one system to another (as much as possible), and that the subsequent states are caused by the program, and so in a sense contained in the original code. So I'm curious in what sense this is non-objective.

To clarify, that whole paragraph is about appearances. Code is supposed to be universal, but state appears as a particular thing against the supposed universality of code. This manifests as having some truth in the world, but only because the assumptions about what code is are written into our technology of code in the first place. If you have a C language program, for example, in theory it will compile on any machine. But suppose you want a snapshot of a C language program at a particular point in its execution? The only way we have of reliably doing this right now is to take a snapshot of the entire memory core and all the registers, and to restore the machine to that state. So while C is portable, C state is not. There isn't an implementation of this in the toolchain, but a snapshot of a noneleatic program would be an equally legitimate program. This is part of why I implemented a freeze-frame sort of function in the interpreter for the virtual machine. At each step, you can examine the code, and in theory you know exactly what's happening and where we are. And you can understand this just from the code, you don't also need other information like the current value of variables or the current place in the execution. All of that is right there in the code itself. There would be a bit of guesswork in getting back the assembly version from the virtual machine version, but only things like "is this 7 a number or a label?" Like other assemblers, neasm doesn't really let you forget the particulars of the (virtual) machine. In a higher-level language, this wouldn't be the case. A higher level language would have to write labels directly as text, not as numbers, and so as the state of the language progressed, the representation of that state within the code would progress along with it. 
If you created a snapshot of a higher-level noneleatic language, (1) it would be a program equally as readable as the original program, and (2) it would be portable to any other architecture.
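The claim that a snapshot is itself a legitimate program can be illustrated with a toy machine. This is a sketch under loud assumptions: the four-word `[op, dst, a, b]` instruction format and the `HALT`/`ADD` opcodes are invented for the example and are not nevm's real encoding. The only thing borrowed from the description of nevm is that everything, the instruction pointer included, lives in one flat memory.

```python
HALT, ADD = 0, 1   # toy opcodes (hypothetical, not nevm's encoding)


def step(mem):
    """Run one 4-word instruction [op, dst, a, b]; mem[0] is the instruction pointer."""
    ip = mem[0]
    op, dst, a, b = mem[ip:ip + 4]
    if op == HALT:
        return False
    mem[0] = ip + 4              # advance the instruction pointer
    mem[dst] = mem[a] + mem[b]   # ADD: operands are memory addresses
    return True


def run(mem):
    """Step until HALT and return the final memory image."""
    while step(mem):
        pass
    return mem


program = [
    4, 0, 5, 7,      # 0: IP   1: acc   2: constant 5   3: constant 7
    ADD, 1, 1, 2,    # 4: acc = acc + 5
    ADD, 1, 1, 3,    # 8: acc = acc + 7
    ADD, 1, 1, 1,    # 12: acc = acc + acc
    HALT, 0, 0, 0,   # 16
]

live = list(program)
step(live)                       # execute one instruction
snapshot = list(live)            # the snapshot is just another program image

resumed = run(list(snapshot))    # load the snapshot as if it were a fresh program
finished = run(live)             # let the original run continue
print(resumed == finished)
```

No register dump or debugger session accompanies the snapshot; copying the list captures where execution is and what has been computed so far.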

In theory, by the way, for something like C a debugger is supposed to get at state in a sort of portable fashion. But in practice it often does not, as a "step" might bring you in-between instructions, or more often over several instructions. And regardless, there's little to no regulation as to how this state is presented.

There's another sense in which our definitions of code and state make state into a particular thing while code always retains its universality. Code refers almost invariably to source code, to what was originally written. Whereas state quite commonly refers to many different things: variables, screen, even the state of things whose representation isn't completely accessible to the semantics of code in the system, such as whether the instruction bus is caching the results of one or another conditional branch. I would argue, in fact, that "state" can just mean every part of the actual execution of a program which isn't code. So while you might be talking about state as an abstract entity that runs along with the calculation, in practice it rarely turns out to be that. In practice when we ask about state, we might be asking "is a key currently being held down by the user?" or something like that. Haskell, for example, has to break with its pure functionality because drawing to the computer screen, sending a packet on the network, etc. just can't be neatly represented as the result of a function, but has to take place as a "side-effect," i.e. a change of state.

[Ed note: In the CCSWG discussion mentioned upthread, Buswell (prompted by Mark C. Marino) summarized his ledger argument, which is worth reprinting here]

A little background first. This project is trying to develop a materialist notion of ideology, without falling back on reflectionism. Instead, I'm trying to look at places in society where ideas or representations always-already mediate the material: contracts, some kinds of accounting, money, etc. In these places we see an otherwise ideological object (writing on paper, bits on a machine) take on material characteristics. A paper $10 bill for example is different from a $5 bill only through the design printed on it. Its effect in the world is not really dependent on anyone's interpretation of the printed design, but that design nevertheless has an ideological effect. By existing always-already in relation to both material and ideal, these objects always implicitly advance an argument about epistemology. So as the material circuits in which these objects are situated change, the objects themselves change in both material and ideological ways. As a consequence, the epistemic ideology they present also shifts.

In the US prior to about 1855, and at pretty similar dates in the rest of the world, the best way for banks to loan out money was by printing notes. After about 1855, mutatis mutandis, bank accounts became the preferred way to loan money (and checks the preferred means of transferring in the US and a few other places, other kinds of giro schemes and proxies in the rest of the world). Thus, your money became a line on a ledger. That has an ideological effect that I won't get into here. Because of the difficulties of scaling a ledger system (incidentally the same difficulties that led to the widespread adoption of NoSQL databases ten years ago), material changes in what a ledger was and how it was handled were necessary. This technical change took a while to eclipse the single, bound book method, but by the late 1930s, even if there were still physical bound ledger books in many places, bank accounts looked less like a series of books, and more like a complex system of slips, binders, punched cards, etc. It is in constant awareness of that systematicity that code develops. Looking just a little bit earlier, however, the chief anxiety in the adoption of all these new devices and methods is alterability. A book is a record. A system is not. The question therefore became: how do we make system and record into the same thing. Or rather, that is the resulting ideological anxiety. Causally speaking, by record-keeping acquiring a systemic character, systems became records. Interpretation of this fact followed.

Code is not, actually, a system which is also a record. Much to the chagrin of Critical Code Studies, code is continually experienced as opaque, and judged by the organization and character of its human developers, who get to stand in for the whole system. But it is influenced by that anxiety between system and record. In code, that anxiety is recapitulated as the anxiety between code and state.

» In the livecoding answer, you say "because that anxiety [over code and state] has already unraveled somewhat" -- could you expand on this a bit? How are we seeing this trend?

It's more just an intuition at this point, but I'll try to expand a little. I think so-called "scripting languages" like Python and Ruby (and Perl) are doing something a little different than those which came before them. They're pragmatic in a different way, and they're "multi-paradigm" (supposedly). The Ruby community is all about completely abusing the language to write domain-specific languages; this is seen as a feature. Compare this to the vitriolic debates you see in some places as to whether it's good practice to make use of something that in C has "undefined behavior." Lots of people think this is the worst thing you can do. So I'm not sure exactly what the direct relationship is between these changes and the code/state anxiety, but code is in general no longer really being treated as the crystal palace it once was.

» One thing that occurs to me are places where language is not objective due to differences between how the language is defined and the particulars of a compiler. Microsoft C and the C of GCC are two different languages, for instance (as I discovered recently), in what characters can be used in a variable name -- people with other first languages might need to make changes to their programs in order to compile in GCC. Also, as Nick Montfort has pointed out, whether an empty file is a valid C program depends on what compiler you use.

Yes, absolutely. I actually wrote a paper long ago about the concept of undefined behavior in the C standard (although note that what characters can be used in a variable name is not undefined so Microsoft is just plain wrong, apparently). In theory, you're supposed to write C such that undefined behavior doesn't occur. But in a lot of types of programming undefined behavior turns out to be fairly well defined in practice. Sometimes, even, the lack of definition is precisely what you want, since "undefined" is after all a type of abstraction. Cf. Duff's device, but especially the various techniques in the implementations of math.h which use integer arithmetic on floating point numbers.
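For readers who haven't seen integer arithmetic applied to floating point numbers, here is a small sketch of the general idea. In C it would typically go through a union or `memcpy`; here Python's `struct` module stands in, so nothing in it is undefined. The function names are hypothetical, and this is not taken from any particular math.h implementation.

```python
import struct


def float_bits(x):
    """Return the 32-bit IEEE 754 pattern of x as an unsigned integer."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_float(n):
    """Reinterpret a 32-bit unsigned integer as an IEEE 754 single-precision float."""
    return struct.unpack("<f", struct.pack("<I", n))[0]


def fabs_via_bits(x):
    """Absolute value computed by clearing the sign bit with an integer AND."""
    return bits_float(float_bits(x) & 0x7FFFFFFF)


def exponent(x):
    """Unbiased binary exponent of a normal float, read straight from the bit pattern."""
    return ((float_bits(x) >> 23) & 0xFF) - 127


print(hex(float_bits(1.0)), fabs_via_bits(-1.5), exponent(8.0))
```

The integer operations only mean something because of the particular layout of sign, exponent, and mantissa bits, which is exactly the kind of machine particular the C standard declines to pin down.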

However, I wouldn't say this is "not objective." "Undefined" refers to our subjective expectations; objectively there is a definite result.

I should probably address "objective" "appears" and all of those sorts of claims and what I mean, so there isn't confusion. In my thinking, there isn't an opposition between "subjective" and true, or "ideological" and true, or "appearance" and true, or "cultural" and true, or even "cultural" and mathematical. Sometimes people object to what I'm doing on the grounds that the development of code is scientific or objective, on the grounds that the development of code has to do with real concerns in the real world, etc. I don't disagree with that at all. You can calculate the circumference of a circle as 2 times pi times r, or as tau times r; whether you choose tau or pi is somewhat cultural, but it is also scientific, and neither choice is simply some random number. So with structured programming, for example, Dijkstra was really fighting a real thing. And he wasn't doing it arbitrarily, he was carefully considering the real situation in which he found himself, and prescribing various practices that would address that situation. But that situation was always-already cultural. In a certain sense, the anxiety about state infringing on code is an objective, practical concern. Once code and state are separated in the way that we separate them, when state infringes on code it really does cause us to have a more difficult time understanding what happens with the code. And code is the way we think about algorithms, and it's a useful way to think about algorithms. So I'm not trying to split the practice of programming into an objective and scientific part, and a subjective cultural part. All of it is scientific, and all of it is cultural. What I'm imagining with the noneleatic languages is a different science, which developed out of a different culture, and which, from the point of view of this science and this culture is going to appear flawed—but the reverse is true as well, as I'll get to below.

» In the other discussion, you say that our modern architecture is more like Von Neumann than Harvard; could you talk a bit about that? And I'm curious if you consider unusual or theoretical hardware scenarios for your languages? Would you look at, say, ternary machines, or perhaps even more obscure / theoretical / physically impossible architectures for future noneleatic projects?

Yeah, definitely. So, in Eckert's design document for the EDVAC (as opposed to Von Neumann's document; being the more famous of the two, Von Neumann got his name attached to the architectural model), there's a fairly brutal adherence to a single principle: non-replication. The idea was to set up a machine that operated a very small number of circuits which passed information to each other serially, because this meant that as the machine was operating, more of its parts would be in use at any given time. Therefore the machine would operate serially, so that most of the machine could be constructed from circuits that operated on one bit at a time. It would thus maximize cost-effectiveness, since the circuit didn't need to be duplicated for each different digit. Of course this also meant there would only be one memory system, flat, holding code and data. Prior to this point, machines fell roughly into two categories. On the one hand, there were the business machine types of computing devices, where basically data is on a bus that you hook up between different operations, wiring your program into the machine. Code has no representation other than the wires. The ENIAC was designed this way originally. The other kinds of machines, like the Harvard Mark 1 and all of Babbage's machines, would read the instructions from one place and use them to operate on data obtained from some other place. Our computers all have a single memory, holding both code and data, and so the EDVAC (or "Von Neumann") architecture won out.

But the EDVAC machine inherently does not separate code and state. It has a single memory in which they are intermixed. We nevertheless customarily impose that separation on top of the EDVAC architecture. Most processors now are able to rigidly enforce this separation, having a code area which cannot be written to, and a data area which can be written at will. Because of this, self-modifying code sometimes has to jump through strange hoops, like mapping memory pages with very specific flags, in order to arrive at the possibility of self-modification. So the EDVAC architecture won, but we still act as if we have totally separate code and data parts of the memory.

A noneleatic outlook would start with the hardware, and nevm is that hardware. It's not that radically different from existing hardware, but there are a few differences. First, there are no registers whatsoever. There are no parts of the processor that hold temporary results, flags, etc. The single, flat memory has exactly one special location: the IP, which is at location 0. There is therefore no special place where state is stored separately in the machine at all, just a single memory which is code and state all at the same time. I think I could have imagined something more radically different, but the goal of this project was to try to change just this one thing, the separation of code and state, rather than imagining an arbitrary alternative to the current state of affairs.
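One consequence of keeping the IP at location 0 of ordinary memory is that a jump needs no instruction of its own: it is just a store to address 0. The Python sketch below shows that on a toy machine. The four-word `[op, dst, a, b]` format and the opcodes are assumptions made up for the illustration, not nevm's actual instruction encoding.

```python
HALT, ADD = 0, 1   # toy opcodes (hypothetical, not nevm's encoding)


def step(mem):
    """Run one 4-word instruction [op, dst, a, b]; mem[0] doubles as the IP."""
    ip = mem[0]
    op, dst, a, b = mem[ip:ip + 4]
    if op == HALT:
        return False
    mem[0] = ip + 4              # advance first, so a later write to mem[0] wins
    mem[dst] = mem[a] + mem[b]   # the only operation: add two cells, store the sum
    return True


mem = [
    4, 0, 16, 0,     # 0: IP   1: out   2: constant 16   3: constant 0
    ADD, 0, 2, 3,    # 4: IP = 16 + 0, an ordinary store that happens to be a jump
    ADD, 1, 2, 2,    # 8: out = 16 + 16 (never reached)
    HALT, 0, 0, 0,   # 12
    ADD, 1, 2, 3,    # 16: out = 16 + 0
    HALT, 0, 0, 0,   # 20
]

while step(mem):
    pass
print(mem[0], mem[1])
```

The interpreter has no jump opcode and no register file; control flow and data flow both pass through the same `mem[dst] = ...` assignment.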

» We are always extrapolating the code -- running the code in our heads to understand its potential behavior. I'm curious, from your experience working so closely on this code, whether the noneleatic code unrolls in your head in a clear way, and whether, if we had worked with noneleatic systems from the beginning, we would perhaps find them more readable. How much of this is due to the historic decision to go with the conditional branch, and how much is the inherent readability of one system or the other? Also, while this is theoretical research, are there certain problems that noneleatic code may be better at describing or understanding than conventional code?

Well, right now noneleatic code is pretty unreadable. But after writing a few programs, it does unroll in my head in a relatively clear way. One of my goals from the start was not just to create the language, but also to see if I could enter into the idioms that a longtime user of that language might use for this or that type of thing they wanted to accomplish. I think I've done this fairly well, although erasing twenty-plus years of training is of course impossible. I do think that some of the unreadability has to do with the fact that all I have so far is a machine and an assembly language, and most people don't consider those all that readable in the first place.

But I also think this noneleatic system would have different values and would think about readability in a different way. Someone used to the perspective of the noneleatic languages would be extremely confused looking at our languages. Where is the state? But maybe this person figures out how to write a simple program anyway. And then they're debugging it, and they'd ask you, "Hey, how can I get a snapshot of the program at a particular point?" And you'd refer them to a debugger. And they'd say, "No, I mean like a printout of the whole program at this particular point," and you'd refer them back to the debugger. And it would seem like a monofocused, microscopic insight into the state of the program, and they'd look at you like you were crazy for accepting this terrible tool. So it might be that here one kind of readability is being sacrificed for another.

My suspicion is that unrolling a program in one's head would be less important if state were always transparent. But also, in the words of Fred Brooks, "Show me your flowcharts and conceal your tables, and I shall continue to be mystified. Show me your tables, and I won't usually need your flowcharts; they'll be obvious." In the modern context, this is usually interpreted to mean that keeping more of the program in better-defined data structures is generally the best way to get readability. And the noneleatic languages definitely push towards this.
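
As a small illustration of that reading of Brooks, here is a sketch in ordinary Python rather than in a noneleatic language. The shipping rules, the numbers, and the names `shipping_branches`, `SHIPPING`, and `shipping_table` are all made up for the example. The first version hides its rules in control flow; the second puts them in a table.

```python
# "Flowchart" version: the rules are buried in branches.
def shipping_branches(region):
    if region == "domestic":
        return 5
    elif region == "europe":
        return 12
    elif region == "asia":
        return 15
    raise KeyError(region)

# "Table" version: the rules are data, and the code is nearly trivial.
SHIPPING = {"domestic": 5, "europe": 12, "asia": 15}

def shipping_table(region):
    return SHIPPING[region]

# Both versions agree on every known region.
for region in SHIPPING:
    assert shipping_branches(region) == shipping_table(region)
```

With the table in view, what the program does is readable at a glance, and adding a region means editing data rather than control flow.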

Addressing technical problems was definitely not my focus with these languages. Nevertheless, as I've already alluded to, they could be useful if you need to have human-readable snapshots. But I definitely think there's an eleatic way of doing this as well.

» What is the academic landscape like in 2018 for the cultural studies approach to code?

I'm not sure there's a particular "cultural studies" approach to code. But cultural studies in 2018 is very multidisciplinary. I think more and more people in the humanities are realizing that code is symbolic language, created by humans, and that we in the humanities know a thing or two about languages and symbols created by humans. That's the basic tenet of critical code studies, in an overly distilled form perhaps.

I think the main challenge we face in the humanities is the same as for any multidisciplinary project: acquiring enough expertise in our object of critique to be able to truly understand it. Many people have expertise in media theory, for example, that puts them in a very good position to understand code, but they don't actually know how to code. Sometimes this turns out OK; sometimes I feel like it's a bit of a disaster. There are always techniques for responsibly working around knowledge you do not possess in order to make the biggest impact with the knowledge you do possess. That, along with acquiring as much expertise as possible, is the essence of multidisciplinarity.

I should note, however, that I think computer science is suffering just as much as any other discipline from the difficulties in being multidisciplinary. It's just something that every discipline in the academy is working on right now. Currently, I think that's felt the most in the portion of computer science which deals with video games, where one needs the concepts of narratives, game mechanics, affect, etc. to intersect with the process of coding. But I think the absence of multidisciplinarity is palpable across computer science as a whole. As an example closer to our interest in programming languages, the fundamental task for the vast majority of graduates, who will go on to work in industry, is to be able to clearly and quickly read and write incredibly large programs. This is about composition, and reading comprehension, and I think computer science as a discipline, with a few individual exceptions of course, is not very well prepared to teach or research such things.

» For people interested in this idea, what should they read? Is there other work out there now that is particularly relevant?

Eventually I'll publish something. But until then, there are a few other completely different takes on the cultural nature of code and its contingent history. One of the most well thought out is Wendy Chun's Programmed Visions. David Golumbia's The Cultural Logic of Computation is pretty interesting as well. Mark Marino is currently writing a Critical Code Studies book that I'm sure will be great. On the history of computing devices I would recommend Martin Campbell-Kelly's Computer: A History of the Information Machine. The history of programming languages has fewer texts than a lot of the rest of computing history, but there are a few proceedings from the conferences titled "History of Programming Languages," or "HOPL."