"It is well-known that the x86 instruction set is baroque, overcomplicated, and redundantly redundant. We show just how much fluff it has by demonstrating that it remains Turing-complete when reduced to just one instruction."

So begins mov is Turing-complete by Stephen Dolan, a short paper that proves any program executable on a conventional computer can be written with a palette of only one assembly instruction in x86. It also illustrates how to program with the mov (for "move") instruction alone, working through the practical aspects of this bizarre approach to programming in a surprisingly readable way.

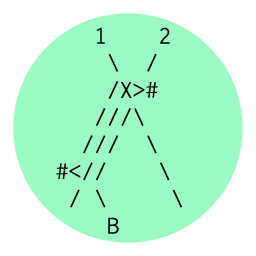

Dolan, a programming language researcher at the University of Cambridge, makes the point that mov is actually quite complex. The "fluff" Dolan alludes to in the quote above is the variation of addressing modes. Mov can load the content of a memory cell into a register, or simply load the address of that cell into a register (like the lea command does). While this might sound like a minor difference, the first example in Dolan's paper shows that using the two addressing modes together allows us to do a comparison: the equivalent of an "if" statement, clearly not what mov was intended for. He accomplishes this with two symbol cells, pointed to by two different registers. The "value" of the symbol is in where it points in memory; we test whether two variables have the same value by seeing if their symbol cells point to the same place in memory.

At top, registers A and B point to different values, at bottom, they are equivalent

First, 0 is copied into the cell pointed to by Register A and 1 is copied into the cell pointed to by Register B. Then we look again at the value pointed to by A. If it's still a 0, A and B point to different places, but if it's a 1, they point to the same place: copying into B replaced what was copied into A. Since the value of the cell has no meaning in this symbol cell architecture, we can set that value to 1 or 0 without altering our program.

m/o/vfuscator

Dolan finishes the paper with a tongue-in-cheek call for action to write a mov-only compiler. Another researcher, with the confusingly similar-looking name Domas, picked up the gauntlet. Chris Domas is a cybersecurity expert at the nonprofit Battele Memorial Institute, a job which he says gives him "time to explore the fringe areas of cybersecurity." There is little so fringe as his movfuscator (pronounced like "obfuscator"), the compiler he constructed to output only mov instructions. The first version of movfuscator compiled brainfuck. While brainfuck is the classic esolang used for Turing Completeness proofs, it's impractical and quite low-level, so serves here primarily as an initial experiment in translating the behaviors covered by the assembly commands into movs: incrementing, branching, etc. Version 2 takes the leap to compiling C, a far more ambitious work; now we have function calls, data structures, and can write our all-mov programs in one of the most widely used programming languages of all time.

The example program Domas shares in his overview is a primality test (determining which in a set of values are prime), written in C. Compiling it in (the widely used C compiler) gcc generates thirty lines of assembly code. Movfuscator produces 150+ lines of assembly -- all mov instructions, of course. The strategy Domas uses for branching (drawn from the original paper) is can be compared to what we saw in Evan Buswell's piece. Buswell turns on and off sections of code to run by masking out jump commands. Domas can't do this with movs, so actually executes every one of the "skipped" statements, but first switches the pointers to a scratch memory space so they won't affect the "real" program data. Domas illustrates the difference the control flow graphs below. The movfuscator output is a straight line; every iteration of the program follows the same enormous loop.

Control flow graphs of the primality test program in gcc (left) and movfuscator (right)

As one can imagine, this is not at all efficient, and the movfuscated version of the code is far slower, as shown in his branchless (movfuscated) DOOM, which runs just like the original game, except that each frame takes 7 hours to write to the screen. Click a key and wait seven hours to see the result. Domas assures us this version of the game is "exploit hardened," in that it is entirely secure against the Meltdown and Spectre CPU vulnerabilities. After all, these attacks work on speculative branching; as there is no branching for the chip to optimize, the exploit can't happen.

Domas's project has received some notoriety, mostly due to his rapid-fire talk at Shakacon on movfuscator and its follow-up project reductio, discussed below. The video (embedded below) is worth watching for a more in-depth discussion of technical challenge of the project. Domas went so far as to create floating-point handling -- normally not usable with movs -- by writing an enormous (500,000 mov instructions!) Arithmetic Logic Unit.

The project has received enough attention that two other programmers, Julian Kirsch and Clemens Jonischkeit, responded with a demovfuscator which analyzes code compiled to all movs and attempts to recreate traditional control flow. As astonishing as movfuscator itself is, unworking the seemingly undifferentiated flow of movs into something traditional is an incredible feat.

Chris Domas discussing reductio at Shakacon

reductio ad absurdum

After creating movfuscator, Domas looked more deeply at the polysemy of mov that had made movfuscator possible, and decided to start stripping down the alternate meanings of the command in an attempt to "extract its essense." He removed 8 and 16-bit addressing, and eliminated the multiplicity of addressing options, creating his own artificial MOVE instruction, pronounced "big move." MOVE always copies from one scratch location to another, so in x86, it's implemented as two movs: a read and a write. It takes four arguments, two addresses and two offsets.

His all-MOVE programs are similar in idea to the all-mov ones. But now that every command is stripped down to these four parameters, a program using this approach becomes nothing but the list of these numbers. The program is entirely determined by its starting state, to the content of the data table which determine where data will be mov-ed to. He approached this idea starting with the observation that two different sets of code can accomplish the same thing. He built reductio ad absurdum to answer the question of whether the inverse was true: if the same code path code accomplish multiple, seemingly incompatible things. Domas jokes how this gives programmers the ultimate excuse. "Someone asks you did you finish the code for something — Yep, it's all done. I just have to finish the data table."

If we think of the "program" in reductio as simply this list of numbers, the data table which determines its behavior, this sounds somewhat familiar from the OISCs. The One Instruction Set Computers, languages like SUBLEQ or BitBitJump, have programs are just lists of numbers; the operands for their single (unnamed) command. These commands are Frankenstein amalgams of ordinarily distinct instructions, only called in a strict order: usually some form of copy, compare, and branch based on the comparison results. Compiled to x86, they produce a varied list of assembly instructions. The beauty of reductio is that the programs, however different their behavior, are actually completely identical: they have the same assembly profile. Like movfuscator, reductio is reminiscent of JSFuck: a latent ability of x86, discovered by one person, and brought to its logical conclusion—through great effort—by another.

Domas, a security researcher, is particularly interested in how this can be used to bypass security protocols. When code becomes data (has identical behaviors as the assembly level), it becomes quite difficult to see what that code is, rendering reverse engineering tools inefficient or useless.

Assembly code for AES encryption (left) and Minesweeper (right)

Mark Barnes has ported movfuscator to the Harvard architecture. This means reductio can be ported as well -- which feels particularly apt, as the division between code and data is built into the Harvard architecture itself. Reductio shows how fluid these two concepts are, that any clear-cut division can be undermined, and the types of overflow exploits this architecture is resistant to actually can happen if the code is written strangely enough.

Reductio is a bit of an odd name for the piece. It succeeds at making all programs the same. But does it do the second half, to prove their absurdity? Perhaps the absurdity is in the labor of programming: by reducing away "programming" to the construction of identical programs, it makes strange and ridiculous this labor. Reductio moves computing away from the indexicality of instructions to the behavior they represent; essentially the instructions lose all meaning; there is no way to examine them and determine what the programs we write will do. This is replaced with a form of abstract computing, which puts the building blocks of computation in a new light.

As Domas puts it, "We've all been brought up to think when I'm writing a program, I write code that tells the processor what to do. And I write different code so the processor can do different things. But that doesn't necessarily have to be the case, as we saw here. The exact same code can actually do pretty much anything ... If the code that we write makes no difference, what does it mean to program something?"