“It is by no means obvious that in order to be intelligent human beings have solved or needed to solve the large data base problem” - Hubert Dreyfus

By the late 1960s, AI had failed to meet many of the predictions made a decade before. There were no programs discovering new mathematical theorems, or playing chess at more than a dopey amateur level, or processing more than the most rudimentary natural language – most importantly, it had not become self-learning. AI researchers remained enthusiastic, blaming the slow progress on hardware. Hubert Dreyfus, however, predicted that AI, as it was then conceived, had already hit its limits because it was grounded in a flawed model of intelligence.

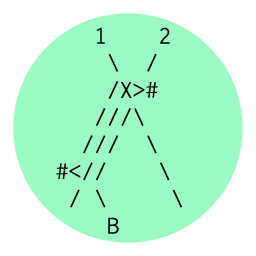

According to Dreyfus, computer scientists had unwittingly adopted a Rationalistic view of the mind dating back to Plato. A key strategy of “Good Old-Fashioned AI” (GOFAI), as Dreyfus called it, was to build trees of information and use search algorithms to traverse the tree and collect information: a dog is a mammal, a mammal is an animal, etc. This mimics the concept of mind that Plato had put forth: the mind holds a map of the world as we understand it, which we use to reason our way to an understanding of a given situation.
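To make the "tree of information" idea concrete, here is a minimal sketch of an is-a hierarchy and the kind of lookup that walks it. The `IS_A` table and the `ancestors` and `is_a` helpers are hypothetical names invented for this illustration; they are not taken from any actual GOFAI system.

```python
# Hypothetical is-a hierarchy: each concept points to its parent category.
IS_A = {
    "dog": "mammal",
    "cat": "mammal",
    "mammal": "animal",
    "sparrow": "bird",
    "bird": "animal",
}


def ancestors(concept):
    """Walk up the tree, collecting every category the concept falls under."""
    chain = []
    while concept in IS_A:
        concept = IS_A[concept]
        chain.append(concept)
    return chain


def is_a(concept, category):
    """Answer 'is a <concept> a <category>?' by searching the chain of parents."""
    return category in ancestors(concept)


print(ancestors("dog"))       # ['mammal', 'animal']
print(is_a("dog", "animal"))  # True
print(is_a("dog", "bird"))    # False
```

Everything the program "knows" about a dog has to be written into the table ahead of time, which is where the large data base problem from the opening quote comes in.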

In Dreyfus’s “What Computers Can’t Do” (1972), he encouraged AI researchers to consider other models of the mind, including Heidegger’s concept of “thrownness.” When we want to hammer a nail, we don’t recall data about hammers, analyzing their history or associated facts; instead, we pick up an object that we barely register in a linguistic form, thinking of it only in terms of its current utility (a “driver-of-nails”). This is the difference between cognition and reasoning. Likewise, when playing a game of chess, we don’t mentally run through the 20,000 possible outcomes from a given scenario. Instead, we focus on parts of the board that feel wrong, based on our experience of playing the game – expertise lets us home in on what’s important, rather than considering every possibility. While his critique was seen as ungenerous at the time, he was more or less proven correct: Connectionism, the statistically based competing model of AI (then in its early stages), now dominates the field.

One of the early successes of AI (in the GOFAI era) was Terry Winograd’s SHRDLU, a program in which one orders a mechanical arm to manipulate differently shaped blocks in an artificial space (all of this simulated through text). SHRDLU could work out which blocks you meant from what you had said previously, despite the fact that many blocks are identical – in other words, it could understand context. However, like many of the other early AI successes, it would not scale; adding new elements to that contained world quickly ran into a database too large to manage on the hardware of the time.
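As a rough illustration of what resolving a reference from prior conversation can look like, here is a toy sketch. It is not how SHRDLU was implemented; the `BlocksWorld` class, its `resolve` method, and the block names are all hypothetical, and the only "context" it tracks is the most recently mentioned block.

```python
class BlocksWorld:
    """Toy blocks world that remembers the last block the user referred to."""

    def __init__(self, blocks):
        # blocks maps a hypothetical block name to a (color, shape) pair.
        self.blocks = dict(blocks)
        self.last_mentioned = None

    def resolve(self, phrase):
        """Return the name of the block a phrase refers to."""
        words = [w for w in phrase.lower().split() if w not in ("the", "a")]
        if words == ["it"]:
            # "it" only makes sense in light of what was said earlier.
            if self.last_mentioned is None:
                raise ValueError("nothing has been mentioned yet")
            return self.last_mentioned
        matches = [
            name
            for name, attrs in self.blocks.items()
            if all(word in attrs for word in words)
        ]
        if len(matches) != 1:
            raise ValueError(f"{phrase!r} matches {len(matches)} blocks")
        self.last_mentioned = matches[0]
        return matches[0]


world = BlocksWorld({
    "b1": ("red", "block"),
    "b2": ("green", "block"),
    "b3": ("red", "pyramid"),
})
print(world.resolve("the red block"))  # b1
print(world.resolve("it"))             # b1
```

Even this toy hints at the scaling problem: every new kind of object or way of referring to one needs another hand-written rule.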

By the 1980s, Winograd was one of the AI pioneers turning away from GOFAI, and he (along with fellow Stanford engineer Fernando Flores) wrote “Understanding Computers and Cognition.” A key point Winograd and Flores make is a lesson from the Speech Act Theory of Austin and Searle: language is not only (or perhaps even primarily) about the exchange of information; we speak for many reasons other than to trade data with other nodes. While this may sound obvious, it was not clear to computer scientists in the 1970s.

The classic example from Austin is the performative utterance: when we make a promise or name a ship, we make something occur in the world rather than state something with a truth value. While this seems friendly to code (we’ve looked at Speech Act Theory previously on this blog, in terms of the performativity of the text of code), it also pushes the “exchange of information” quality of text down to a secondary attribute. Drawing from Habermas, Winograd and Flores show how most statements are not true or false but rather felicitous or misleading, depending on the shared context of speaker and listener in terms of culture, personal history, and other factors that are not so easy to represent or evaluate in AI.

This problem of context is still relevant in AI: an “intelligent” personal assistant like Alexa can understand phrases in many different voices (a problem more easily solvable through statistical techniques) but is worse than a four-year-old child at understanding what we’re talking about. We have learned to speak in an absurdly specific (and often patronizing) way to get such a system to respond appropriately. If we could get Alexa to understand context better, it would perhaps overly humanize her, creating a creepy, verbal uncanny valley.

So how is this relevant to esolangs?

As designers of programming languages, we’re building interfaces between person and machine at a much rawer level than an Alexa. Here, we are even more squarely on the machine’s turf, translating our intent into discrete, logical steps. Looking at the missteps of designers working the other way, we can see their implicit assumptions about language – how we use it, why we use it, where its ambiguities lie – and these could provide great material for exploring that chasm of understanding in languages that mediate between person and machine.